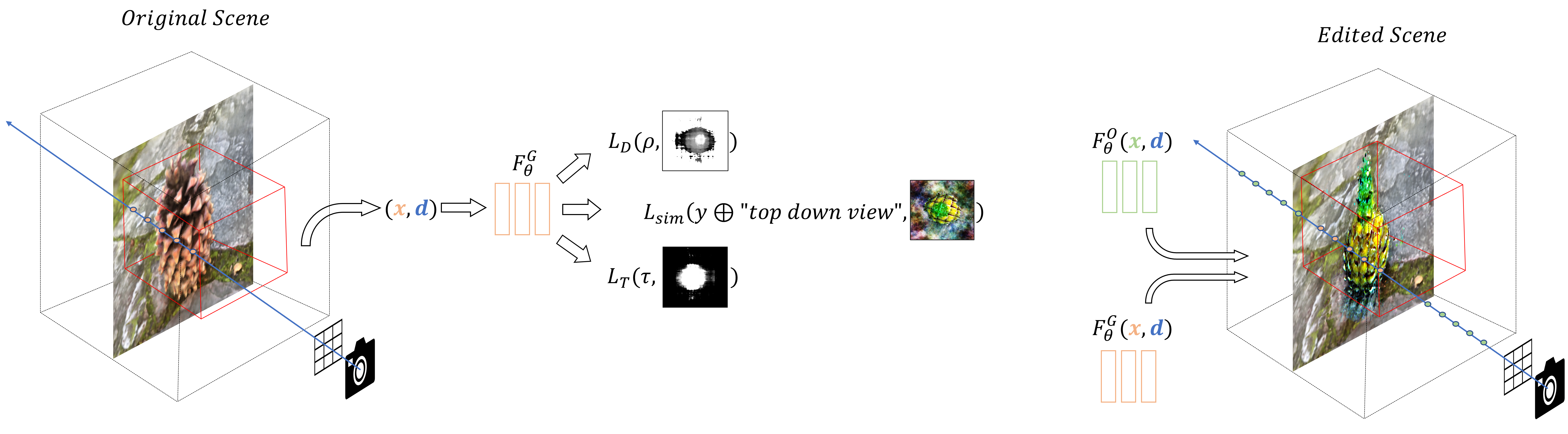

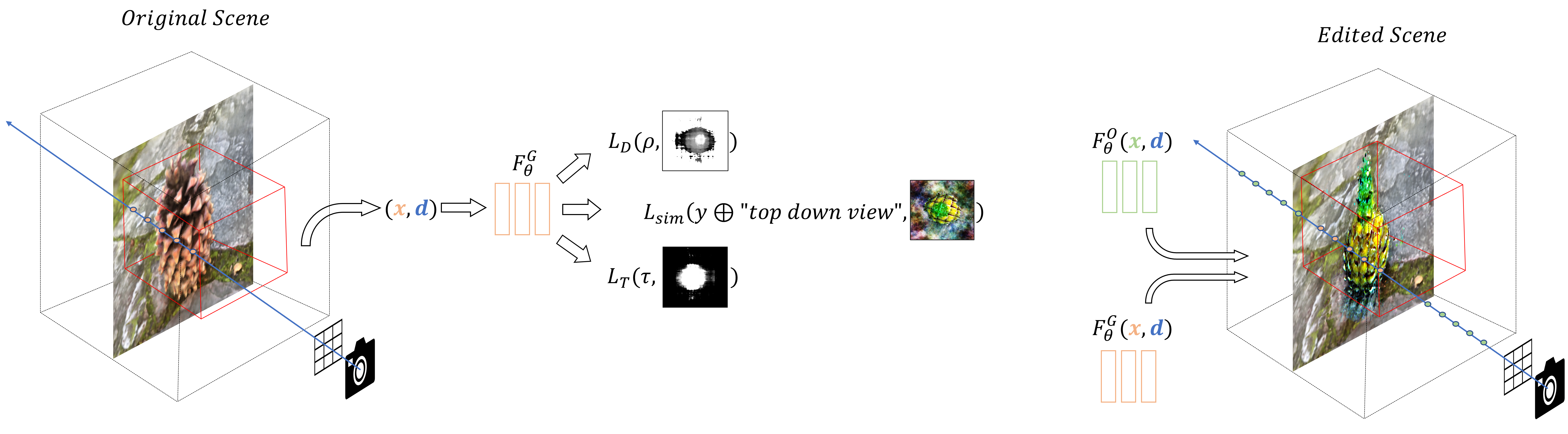

Editing a local region or a specific object in a 3D scene represented by a NeRF is challenging, mainly due to the implicit nature of the scene representation. Consistently blending a new realistic object into the scene adds an additional level of difficulty. We present Blended-NeRF, a robust and flexible framework for editing a specific region of interest in an existing NeRF scene, based on text prompts or image patches, along with a 3D ROI box. Our method leverages a pretrained language-image model to steer the synthesis towards a user-provided text prompt or image patch, along with a 3D MLP model initialized on an existing NeRF scene to generate the object and blend it into a specified region in the original scene. We allow local editing by localizing a 3D ROI box in the input scene, and seamlessly blend the content synthesized inside the ROI with the existing scene using a novel volumetric blending technique. To obtain natural looking and view-consistent results, we leverage existing and new geometric priors and 3D augmentations for improving the visual fidelity of the final result. We test our framework both qualitatively and quantitatively on a variety of real 3D scenes and text prompts, demonstrating realistic multiview consistent results with much flexibility and diversity compared to the baselines. Finally, we show the applicability of our framework for several 3D editing applications, including adding new objects to a scene, removing/replacing/altering existing objects, and texture conversion.

@article{gordon2023blended,

title={Blended-NeRF: Zero-Shot Object Generation and Blending in Existing Neural Radiance Fields},

author={Gordon, Ori and Avrahami, Omri and Lischinski, Dani},

journal={arXiv preprint arXiv:2306.12760},

year={2023}

}